It’s becoming less of a secret that secretive computer algorithms are affecting our lives. As summarized in a recent Guardian article, algorithms have been associated with (or blamed for) racially biased search results, disastrous financial decisions during the 2008 crisis, and mass surveillance of civilian populations. The hidden nature of algorithms also enables flat-out illegal behavior, such as Uber’s ‘Greyball’ system to avoid giving rides to potential law enforcement officers, or VW’s software to cheat on emissions tests.

The proposed solution for algorithmic bad behavior is usually more transparency. One thought is that algorithms with the potential for harm should be required to submit evidence of their fair and legal decision-making. There could even be a “National Algorithms Safety Board” to investigate cases of algorithmic harm, but even advocates would agree that algorithmic fairness enforcement is a big ask.

As we discuss in the book, technologies that connect people (and businesses) tend to gravitate towards using algorithms to choose what people see. Google search is the most famous example, but Facebook, Twitter, Amazon, and Airbnb have all moved towards algorithmic search results and news feeds, rather than relying on criteria selected by users. The official reason for doing so is usually to provide a ‘better customer experience’, though a more accurate description would be to provide a ‘better business model experience’ for the technology company in the middle of the interaction. Better business model results depend on customer cooperation, of course, but also on satisfying the needs of many other groups such as investors, regulators, and advertisers.

(Or, using the book’s framework, secretive algorithms are one of the most important mediation choices that implement a business model, or the way that value created by technology is captured and shared. The success of the business model also depends on the mobilization, or ongoing cooperation, of many different groups with partially overlapping interests.)

Calls for transparency are to be encouraged, but as long as technology business models are rewarded for experimenting with, and evolving, their secretive algorithms, the trend towards increasingly consequential digital opacity is likely to continue. We could ask legal systems to help control this by imposing massive fines for illegal behavior. The problem is, as the article points out, these algorithmic methods are such powerful profit generators that a company like Alphabet (Google’s parent) can build in the occasional billion-dollar legal fine as a cost of doing business. The more fundamental issue with legal control is that digital technologies create new situations that don’t fit exactly with previous law, constantly exposing legal ambiguities that aggressive innovators can exploit.

If technology business models are to be changed, it will undoubtedly involve law enforcement and regulators. But change will probably require a more multifaceted set of pressures from business competitors, new technologies, new sources of funding, customer demands, watchdogs, and a new sense of professional obligation from the geeks who build these things.

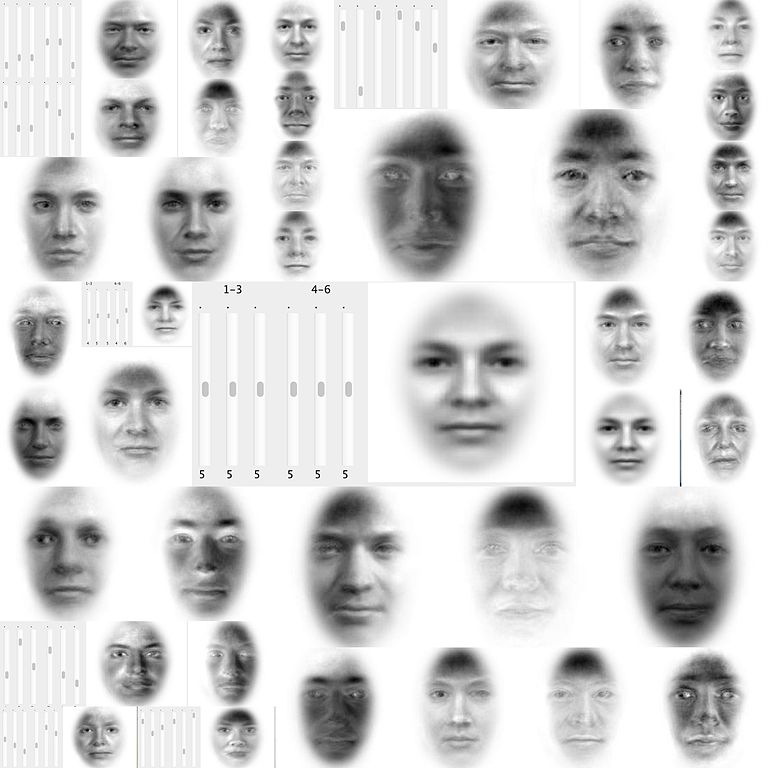

Image credit: wikimedia commons.